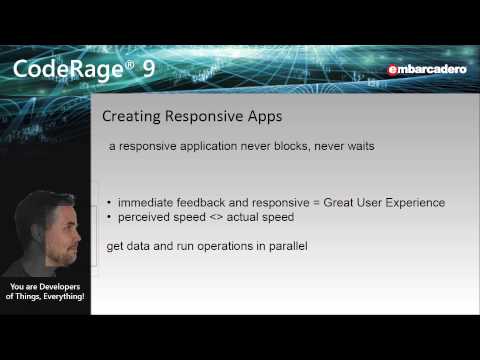

How do you loop through each row of a DataFrame in PySpark? Python allows the else keyword with a for loop. Because the built-in filter() returns an iterator, it doesn't require that your computer have enough memory to hold all the items in the iterable at once. The SparkContext object allows you to connect to a Spark cluster and create RDDs. You can think of PySpark as a Python-based wrapper on top of the Scala API. Spark itself runs jobs in parallel, but if you still want parallel execution in your driver code, you can use simple Python code for parallel processing (this was tested on Databricks only). For example, if we have 100 executor cores (50 executors with 2 cores each) and only 50 partitions, using this method with a thread pool of 2 processes will reduce the time by approximately half.
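
The following plain-Python sketch (no Spark involved) shows why filter() is memory-friendly. It applies filter() to the infinite iterator itertools.count(), which could never fit in memory, and uses itertools.islice() to pull out only the first few matches. The predicate name is_even is only for illustration.

```python
from itertools import count, islice

def is_even(n):
    """Predicate for filter(): True for even integers."""
    return n % 2 == 0

# count() yields 0, 1, 2, ... forever, so it can never be held in memory.
# filter() is lazy: it returns an iterator and tests values only on demand.
evens = filter(is_even, count())

# islice() pulls just the first five matches out of the lazy pipeline.
first_five = list(islice(evens, 5))
print(first_five)  # [0, 2, 4, 6, 8]
```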

Ideally, you want to author tasks that are both parallelized and distributed. We then use the LinearRegression class to fit the training data set and create predictions for the test data set. The return value of compute_stuff (and hence each entry of values) is also a custom object. DataFrames, like other distributed data structures, are not iterable and can be accessed only through dedicated higher-order functions and/or SQL methods. In full_item(), I am doing some select operations, joining two tables, and inserting the data into a table. But using a for loop and foreach(), it takes a lot of time. I have changed your code a bit, but this is basically how you can run parallel tasks. Here, name, age, and city are not variables but simply keys of the dictionary. Python exposes anonymous functions using the lambda keyword, not to be confused with AWS Lambda functions. (In .NET's Parallel.For, by contrast, a ParallelLoopState variable can be used in your delegate's code to examine the state of the loop.) As I understand your problem, I have written sample code in Scala that gives your desired output without using any loop. Broadcast variables can be used to cache a read-only value in memory on every node. Using thread pools this way is dangerous, because all of the threads will execute on the driver node.
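
Here is a small sketch of two of the points above, using hypothetical records. The names name, age, and city are dictionary keys. A lambda supplies the sort key inline, so no named function is needed.

```python
# Hypothetical records; name, age, and city are dictionary keys, not variables.
people = [
    {"name": "Ana", "age": 34, "city": "Lisbon"},
    {"name": "Bo", "age": 27, "city": "Oslo"},
    {"name": "Cy", "age": 41, "city": "Lima"},
]

# An anonymous (lambda) function extracts the sort key from each dictionary.
by_age = sorted(people, key=lambda person: person["age"])
names = [person["name"] for person in by_age]
print(names)  # ['Bo', 'Ana', 'Cy']
```
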
Note: The above code uses f-strings, which were introduced in Python 3.6.

Also, compute_stuff requires the use of PyTorch and NumPy. Here we have used a thread pool from Python's multiprocessing module with processes=2. The last two digits of the timestamps show that the function is executed for two columns at a time. The main idea is to keep in mind that a PySpark program isn't much different from a regular Python program. This is the power of the PySpark ecosystem, allowing you to take functional code and automatically distribute it across an entire cluster of computers. In your code, you are using a while loop and reading a single record at a time, which will not allow Spark to run in parallel. The last portion of the snippet below shows how to calculate the correlation coefficient between the actual and predicted house prices. To stop your container, type Ctrl+C in the same window you typed the docker run command in. One of the ways that you can achieve parallelism in Spark without using Spark data frames is by using the multiprocessing library. I will post that in a day or two. To use sparkContext.parallelize() in an application: since PySpark 2.0, you first create a SparkSession, which internally creates a SparkContext for you. The SparkContext also provides broadcast variables and accumulators.
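
The house-price snippet itself is not shown here. As a stand-in, this plain-Python sketch computes the Pearson correlation coefficient between actual and predicted values. The helper pearson_r and the prediction values are hypothetical. In a PySpark program you would more likely compute this on collected predictions or with DataFrame.stat.corr.

```python
import math

def pearson_r(actual, predicted):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    # Covariance and variances, all left unnormalized (the 1/n factors cancel).
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_a * var_p)

# Hypothetical house prices (in thousands) and model predictions.
actual = [24.0, 21.6, 34.7, 33.4, 36.2]
predicted = [25.1, 22.0, 31.9, 32.8, 35.0]

r = pearson_r(actual, predicted)
print(round(r, 3))
```
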
Once all of the threads complete, the output displays the hyperparameter value (n_estimators) and the R-squared result for each thread. Not well explained with the example, then. Here is an example of the URL you'll likely see: The URL in the command below will likely differ slightly on your machine, but once you connect to that URL in your browser, you can access a Jupyter notebook environment, which should look similar to this: From the Jupyter notebook page, you can use the New button on the far right to create a new Python 3 shell. I used the Boston housing data set to build a regression model for predicting house prices using 13 different features. That being said, we live in the age of Docker, which makes experimenting with PySpark much easier. PySpark runs on top of the JVM and requires a lot of underlying Java infrastructure to function. As long as you're using Spark data frames and libraries that operate on these data structures, you can scale to massive data sets that are distributed across a cluster. Spark code should be designed without for and while loops if you have a large data set. There are higher-level functions that take care of forcing an evaluation of the RDD values.
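
To make "forcing an evaluation" concrete, here is an analogy in plain Python rather than Spark. map() builds a lazy pipeline, much like an RDD transformation, and nothing runs until something consumes it, much like an RDD action. The expensive function and the calls list are illustrative only.

```python
calls = []

def expensive(x):
    """Records each call so we can see when work actually happens."""
    calls.append(x)
    return x * x

# Building the pipeline does no work yet: map() is lazy, much like an RDD
# transformation such as rdd.map().
pipeline = map(expensive, range(5))
assert calls == []  # nothing has been computed so far

# Consuming the iterator forces evaluation, similar in spirit to an action
# such as collect() or count() on an RDD.
result = list(pipeline)
print(result)  # [0, 1, 4, 9, 16]
print(calls)   # [0, 1, 2, 3, 4]
```
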
This post discusses three different ways of achieving parallelization in PySpark: native Spark libraries such as MLlib, thread pools, and Pandas UDFs. I'll provide examples of each of these approaches, using the Boston housing data set as a sample data set. Can you process one file on a single node? Based on your description, I wouldn't use PySpark. There are a lot of functions that will leave executors idle. For example, consider a simple function that computes the duplicate count at the column level. It takes a column, gets the duplicate count for that column, and stores the result in a global list opt. I have added a timestamp to measure the time. Note: since the dataset is small, we are not able to see a large time difference. To overcome this, we will use Python multiprocessing and execute the same function. But only 2 items max? They publish a Dockerfile that includes all the PySpark dependencies along with Jupyter. In this post, we will discuss the logic of reusing the same session. MSSparkUtils is the Swiss Army knife inside Synapse Spark. If you want to do something to each row in a DataFrame object, use map. To better understand RDDs, consider another example. I have the following folder structure in blob storage: folder_1 contains n1 CSV files, folder_2 contains n2 CSV files, and so on up to folder_k, which contains nk CSV files.
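
Here is a sketch of the column-level duplicate count run on a thread pool of two. It assumes a small in-memory table stored column-wise. The table, its columns, and the definition of "duplicate count" (number of values minus number of distinct values) are assumptions for illustration. In the original, each call would launch a Spark job, and the threads would only submit those jobs from the driver.

```python
import time
from multiprocessing.pool import ThreadPool

# Hypothetical table stored column-wise; in the original this would be a
# Spark DataFrame and each count would be a separate Spark job.
table = {
    "id": [1, 2, 3, 4, 5],
    "city": ["Oslo", "Lima", "Oslo", "Oslo", "Lima"],
    "age": [30, 30, 41, 27, 41],
}

opt = []  # global result list, as in the description above

def dup_count(column):
    """Count values in `column` that repeat an earlier value, and record it."""
    values = table[column]
    dups = len(values) - len(set(values))
    opt.append((column, dups, time.strftime("%H:%M:%S")))
    return column, dups

# Two worker threads, so columns are processed two at a time.
with ThreadPool(processes=2) as pool:
    counts = dict(pool.map(dup_count, table))

print(counts)  # {'id': 0, 'city': 3, 'age': 2}
```
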
PySpark foreach() is an action available on RDDs and DataFrames that iterates over each element. It is similar to a for loop, except that the function runs on the executors and nothing is returned to the driver. The loop does run sequentially, but for each symbol the execution of the loop body is done in parallel, since markedData is a Spark DataFrame and it is distributed. There are a number of ways to execute PySpark programs, depending on whether you prefer a command-line or a more visual interface. Spark is written in Scala and runs on the JVM. Remember, a PySpark program isn't that much different from a regular Python program, but the execution model can be very different, especially if you're running on a cluster. I have some computationally intensive code that's embarrassingly parallelizable. This approach works by using the map function on a pool of threads. Functional programming is a common paradigm when you are dealing with Big Data. With cluster computing, data processing is distributed and performed in parallel by multiple nodes. However, I have also implemented a solution of my own without the loops (using a self-join approach).
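
This sketch maps over a pool of threads to try several hyperparameter values concurrently, using concurrent.futures from the standard library. The evaluate function is hypothetical: it returns a made-up score instead of training a real model. Only the structure carries over, with one configuration per thread and the results gathered on the driver.

```python
from concurrent.futures import ThreadPoolExecutor

def evaluate(trees):
    """Hypothetical stand-in for fitting a model with `trees` estimators and
    returning its R-squared; the score is a made-up deterministic formula."""
    return {"trees": trees, "r_squared": 1 - 1 / (trees + 1)}

tree_values = [10, 20, 50, 100]

# Each configuration runs in its own driver-side thread; executor.map
# returns results in the same order as the inputs.
with ThreadPoolExecutor(max_workers=4) as executor:
    results = list(executor.map(evaluate, tree_values))

best = max(results, key=lambda row: row["r_squared"])
print(best["trees"])  # 100
```
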

It's best to use native libraries if possible, but based on your use cases there may not be Spark libraries available. Luckily, a PySpark program still has access to all of Python's standard library, so saving your results to a file is not an issue: Now your results are in a separate file called results.txt for easier reference later. The Docker container you've been using does not have PySpark enabled for the standard Python environment. He has also spoken at PyCon, PyTexas, PyArkansas, PyconDE, and meetup groups. There can be a lot of things happening behind the scenes that distribute the processing across multiple nodes if you're on a cluster. The result is the same, but what's happening behind the scenes is drastically different. The files are about 10 MB each. Here's a parallel loop on PySpark using Azure Databricks. How the task is split across these different nodes in the cluster depends on the types of data structures and libraries that you're using. Now that you've seen some common functional concepts that exist in Python as well as a simple PySpark program, it's time to dive deeper into Spark and PySpark. So my question is: how should I augment the above code to be run on 500 parallel nodes on Amazon servers using the PySpark framework? In general, it's best to avoid loading data into a Pandas representation before converting it to Spark.
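
The code that saves results is not shown, so here is a minimal sketch using only the standard library. It assumes the results have already been brought back to the driver as a small Python list. The results are placeholder values, and the file goes to a temporary directory.

```python
import os
import tempfile

results = [("trees=10", 0.81), ("trees=20", 0.86)]  # hypothetical results

# Write into a temporary directory so the example does not litter the CWD.
out_dir = tempfile.mkdtemp()
path = os.path.join(out_dir, "results.txt")
with open(path, "w", encoding="utf-8") as fh:
    for label, score in results:
        fh.write(f"{label}\t{score}\n")

# Read the file back to confirm what was written.
with open(path, encoding="utf-8") as fh:
    lines = fh.read().splitlines()
print(lines)
```
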
Next, we define a Pandas UDF that takes a partition as input (one of these copies) and returns a Pandas data frame specifying the hyperparameter value that was tested and the result (r-squared). I think Andy_101 is right. Usually, to force an evaluation, you can call a method that returns a value on the lazy RDD instance. To "loop" and take advantage of Spark's parallel computation framework, you could define a custom function and use map. However, what if we also want to concurrently try out different hyperparameter configurations? So, you must use one of the previous methods to use PySpark in the Docker container.
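
This plain-Python sketch shows the replicate, group, and apply pattern behind that Pandas UDF, with no Spark or Pandas dependency. The rows, tree_values, and fit_and_score are hypothetical. In PySpark, the group-and-apply step would typically be something like df.groupBy("trees").applyInPandas(func, schema), where func receives one copy of the data as a Pandas data frame.

```python
from collections import defaultdict

# Hypothetical training rows (feature, label).
rows = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
tree_values = [10, 20, 30]

# 1. Replicate the data once per hyperparameter value, tagging each copy.
replicated = [(trees, row) for trees in tree_values for row in rows]

# 2. Group by the tag, as groupBy("trees") would in Spark.
groups = defaultdict(list)
for trees, row in replicated:
    groups[trees].append(row)

def fit_and_score(trees, group):
    """Hypothetical per-group function; a real grouped Pandas UDF would fit a
    model on the group and return a one-row result frame."""
    return {"trees": trees, "n_rows": len(group)}

# 3. Apply the function to each group and combine the results.
results = [fit_and_score(t, g) for t, g in sorted(groups.items())]
print(results)
```
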

This is likely how you'll execute your real Big Data processing jobs.

Now that we have the data prepared in the Spark format, we can use MLlib to perform parallelized fitting and model prediction. To interact with PySpark, you create specialized data structures called Resilient Distributed Datasets (RDDs). It also has APIs for transforming data, and familiar data frame APIs for manipulating semi-structured data. This means you have two sets of documentation to refer to: the PySpark API docs and the Scala docs. The PySpark API docs have examples, but often you'll want to refer to the Scala documentation and translate the code into Python syntax for your PySpark programs. So, it would probably not make sense to also "parallelize" that loop. One of the key distinctions between RDDs and other data structures is that processing is delayed until the result is requested. Spark is great for scaling up data science tasks and workloads! This will allow you to perform further calculations on each row. Before showing off parallel processing in Spark, let's start with a single node example in base Python. The multiprocessing library provides a thread abstraction that you can use to create concurrent threads of execution. For SparkR, use setLogLevel(newLevel). Please note that all examples in this post use PySpark. (In .NET's Parallel.ForEach with partition-local variables, by contrast, your delegate returns the partition-local variable, which is then passed to the next iteration of the loop that executes in that particular partition.)
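
The thread abstraction in Python's multiprocessing library is multiprocessing.pool.ThreadPool. This sketch illustrates the caveat about thread pools. Its workers are threads in the current process, so every task reports the same process id. In a PySpark program, that process is the driver. The whoami function is illustrative.

```python
import os
from multiprocessing.pool import ThreadPool

def whoami(task_id):
    """Return the task id with the process id of the worker that ran it."""
    return task_id, os.getpid()

# ThreadPool has the same map() interface as multiprocessing.Pool, but its
# workers are threads inside the current process rather than new processes.
with ThreadPool(processes=3) as pool:
    pairs = pool.map(whoami, range(6))

pids = {pid for _, pid in pairs}
print(pids == {os.getpid()})  # True: every task ran in this one process
```
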
For example, in the function above, most of the executors will be idle because we are working on a single column. We now have a model fitting and prediction task that is parallelized. You can also use the standard Python shell to execute your programs as long as PySpark is installed into that Python environment. In the Spark ecosystem, the RDD is the basic data structure used in PySpark: an immutable, distributed collection of objects. The map function takes a lambda expression and an array of values as input, and invokes the lambda expression for each of the values in the array. @KamalNandan, if you just need pairs, then a self join could be enough.
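
This plain-Python sketch shows the self-join idea for generating pairs, using hypothetical symbols. Joining a list with itself and keeping only the pairs where left < right removes self-pairs and mirrored duplicates. The result is the same as itertools.combinations.

```python
from itertools import combinations

symbols = ["AAPL", "GOOG", "MSFT"]  # hypothetical keys

# A self join keeps every (left, right) pairing; filtering left < right drops
# self-pairs and mirrored duplicates, which is what a join condition such as
# a.symbol < b.symbol would do in Spark SQL.
self_join_pairs = [(a, b) for a in symbols for b in symbols if a < b]

# For plain Python lists, itertools.combinations yields the same pairs.
assert self_join_pairs == list(combinations(symbols, 2))
print(self_join_pairs)
```
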


On platforms other than Azure, you may need to create the driver's Spark context, sc, yourself. However, as with the filter() example, map() returns an iterable, which again makes it possible to process large sets of data that are too big to fit entirely in memory. The code below shows how to load the data set and convert it into a Pandas data frame. So I want to run the n=500 iterations in parallel by splitting the computation across 500 separate nodes running on Amazon, cutting the run-time for the inner loop down to ~30 secs. Again, using the Docker setup, you can connect to the container's CLI as described above. Other common functional programming functions exist in Python as well, such as filter(), map(), and reduce(). To connect to a Spark cluster, you might need to handle authentication and a few other pieces of information specific to your cluster. Imagine doing this for a 100-fold CV. By using the RDD filter() method, that operation occurs in a distributed manner across several CPUs or computers.
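
Here is a short plain-Python sketch of filter(), map(), and reduce() chained together on hypothetical values. In Python 3, reduce() lives in functools. RDDs expose methods with the same names (rdd.filter, rdd.map, rdd.reduce) that apply the same ideas across a cluster.

```python
from functools import reduce

prices = [120, 95, 310, 42, 205]  # hypothetical values

expensive = filter(lambda p: p > 100, prices)        # keep values above 100
with_tax = map(lambda p: p * 1.1, expensive)         # 1-to-1 transformation
total = reduce(lambda acc, p: acc + p, with_tax, 0)  # fold into one value

print(round(total, 2))  # 698.5
```
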
Since PySpark 2.0, the usual entry point is a SparkSession, for example `spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()`, which internally creates the SparkContext. Because Pandas DataFrames are eagerly evaluated, converting to Pandas only makes sense for data that fits in memory on a single machine. When the hyperparameter configurations are evaluated in parallel, each result comes back as a row such as `Row(trees=20, r_squared=0.8633562691646341)`. In the question, size_df is a list of around 300 elements that is iterated over in a for loop.

Is there a way to parallelize this? I have the folder structure in blob storage described above (folder_1 through folder_k, each holding CSV files). I want to read these files, run some (relatively simple) algorithm, and write out some log files and image files for each of the CSV files in a similar folder structure at another blob storage location. I think this does not work. map() is similar to filter() in that it applies a function to each item in an iterable, but it always produces a 1-to-1 mapping of the original items. Hope you found this blog helpful. When you want to use several AWS machines, you should have a look at Slurm. By default, Spark runs functions in parallel and ships a copy of each variable used in the function to each task. However, for now, think of the program as a Python program that uses the PySpark library. Copy and paste the URL from your output directly into your web browser.
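
Here is a local-filesystem sketch of the per-file job. It assumes the blob container can be treated like a directory tree (for example, through a mount) and does not use any Azure SDK. The layout, the process function, and the row-count "algorithm" are hypothetical. The files are independent and only about 10 MB each, so a small thread pool on one node handles them.

```python
import csv
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Local stand-in for the blob-storage layout: folder_1 ... folder_k of CSVs.
src = Path(tempfile.mkdtemp())
dst = Path(tempfile.mkdtemp())
for k in (1, 2):
    folder = src / f"folder_{k}"
    folder.mkdir()
    for i in range(k):
        (folder / f"data_{i}.csv").write_text("x,y\n1,2\n3,4\n")

def process(csv_path):
    """Hypothetical 'relatively simple algorithm': count data rows and write a
    log file into the mirrored folder structure under dst."""
    with csv_path.open(newline="") as fh:
        n_rows = sum(1 for _ in csv.DictReader(fh))
    out = dst / csv_path.relative_to(src).with_suffix(".log")
    out.parent.mkdir(parents=True, exist_ok=True)  # safe if another thread made it
    out.write_text(f"{csv_path.name}: {n_rows} rows\n")
    return out

# Files are independent, so a small thread pool can process them concurrently.
with ThreadPoolExecutor(max_workers=4) as pool:
    logs = list(pool.map(process, sorted(src.glob("folder_*/*.csv"))))

print(sorted(p.relative_to(dst).as_posix() for p in logs))
```
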
To improve performance, we can increase the number of processes to the number of cores on the driver, since these tasks are submitted from the driver machine. We can see a subtle decrease in wall time, to 3.35 seconds. Since these threads don't do any heavy computation, we can increase the number of processes further, and the wall time decreases further to 2.85 seconds. Use case: leveraging horizontal parallelism. We can use this in the following use case: Note: there are other multiprocessing APIs, such as Pool and Process, which can also be tried for parallelizing through Python. GitHub link: https://github.com/SomanathSankaran/spark_medium/tree/master/spark_csv. Please suggest topics in Spark that I should cover, and share suggestions for improving my writing :) Analytics Vidhya is a community of Analytics and Data Science professionals. And the above bottleneck is because of the sequential 'for' loop (and also because of the 'collect()' method). Try this: `marketdata.rdd.map(symbolize).groupByKey().map { case (symbol, days) => (symbol, days.sliding(5).map(makeAvg)) }.foreach { case (symbol, averages) => averages.save() }`, where symbolize takes a Row of (symbol, day) and returns a (symbol, day) tuple. Note that reduceByKey would not work here, because its function receives two values rather than a (key, values) pair, and groupByKey does not guarantee the order of days, so sort them first if order matters. See also pyspark.rdd.RDD.foreach. Note: I have written this code in Scala, but it can be implemented in Python with the same logic.
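
The same per-symbol moving-average idea can be sketched in plain Python with hypothetical data. Here symbolize produces (key, value) pairs, values are grouped per symbol (the groupByKey step), sorted by day, and averaged over five-day windows. A simple mean stands in for makeAvg from the Scala answer.

```python
from collections import defaultdict

# Hypothetical (symbol, day, price) rows, standing in for marketdata.
rows = [("A", d, float(d)) for d in range(1, 8)] + [("B", d, 10.0) for d in range(1, 6)]

def symbolize(row):
    """Return (key, value) so rows can be grouped by symbol."""
    symbol, day, price = row
    return symbol, (day, price)

# Group by key (the groupByKey step).
grouped = defaultdict(list)
for symbol, day_price in map(symbolize, rows):
    grouped[symbol].append(day_price)

def moving_averages(days, window=5):
    """Average price over each run of `window` consecutive days, sorted by day."""
    prices = [price for _, price in sorted(days)]
    return [sum(prices[i:i + window]) / window
            for i in range(len(prices) - window + 1)]

averages = {symbol: moving_averages(days) for symbol, days in grouped.items()}
print(averages)  # {'A': [3.0, 4.0, 5.0], 'B': [10.0]}
```
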

Then, you'll be able to translate that knowledge into PySpark programs and the Spark API. Python's `threading` library provides a thread abstraction that you can use to create concurrent threads of execution. Spark is a distributed parallel computation framework, but there are still some functions that can be parallelized with Python's `multiprocessing` module. Another common idea in functional programming is anonymous functions. Can pymp be used in AWS?
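A short sketch of the `threading` abstraction: each thread runs a hypothetical `process_file` function on its own input, and a lock guards the shared results list. This runs concurrently on one machine only; unlike Spark, nothing is distributed.

```python
import threading

results = []
lock = threading.Lock()


def process_file(name):
    """Hypothetical per-file work; here it just records the name's length."""
    value = len(name)
    with lock:  # guard the shared list against concurrent appends
        results.append((name, value))


files = ["a.csv", "bb.csv", "ccc.csv"]  # hypothetical file names
threads = [threading.Thread(target=process_file, args=(f,)) for f in files]

for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every thread to finish

print(sorted(results))
```

Threads finish in no guaranteed order, which is why the results are sorted before printing.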

And for your example of three columns, we can create a list of dictionaries and then iterate through them in a `for` loop. Remember: pandas DataFrames are eagerly evaluated, so all the data will need to fit in memory on a single machine. In order to use the `parallelize()` method, the first thing that has to be created is a `SparkContext` object. There's no shortage of ways to get access to all your data, whether you're using a hosted solution like Databricks or your own cluster of machines. The `else` block is optional and should come after the body of the loop. To "loop" and take advantage of Spark's parallel computation framework, you could define a custom function and use `map`. Each iteration of the inner loop takes 30 seconds, but the iterations are completely independent. You need to use that URL to connect to the Docker container running Jupyter in a web browser.
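For the three-column example, a list of dictionaries can be walked with a plain `for` loop. The column names `name`, `age`, and `city` and the sample rows are illustrative assumptions; note that they are dictionary keys, not variables.

```python
rows = [
    {"name": "Alice", "age": 34, "city": "Austin"},
    {"name": "Bob", "age": 28, "city": "Boston"},
    {"name": "Chen", "age": 45, "city": "Chicago"},
]

lines = []
for row in rows:
    # "name", "age", and "city" are keys looked up in each dictionary
    lines.append(f"{row['name']} ({row['age']}) lives in {row['city']}")

for line in lines:
    print(line)
```

This loop runs sequentially on one machine; it is the kind of per-item logic you would move into a function passed to `map` to let Spark parallelize it.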
With this feature, you can partition a Spark DataFrame into smaller data sets that are distributed and converted to pandas objects, where your function is applied, and then the results are combined back into one large Spark DataFrame. Now it's time to finally run some programs! All these functions can use lambda functions or standard functions defined with `def` in a similar manner. The script above works fine, but I want to do the processing in parallel in PySpark, which is possible in Scala. `pyspark.rdd.RDD.mapPartitions` is lazily evaluated. Another less obvious benefit of `filter()` is that it returns an iterable. Could this run in, say, a SageMaker Jupyter notebook? `size_DF` is a list of around 300 elements, which I fetch from a table. Soon after learning the PySpark basics, you'll surely want to start analyzing huge amounts of data that likely won't work when you're using single-machine mode. In a Python context, think of PySpark as a way to handle parallel processing without the need for the `threading` or `multiprocessing` modules. This is one post in my Spark deep-dive series. RDDs are optimized to be used on big data, so in a real-world scenario a single machine may not have enough RAM to hold your entire dataset.
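The points about `filter()` returning an iterable and about `lambda` and `def` functions being interchangeable can be checked in plain Python. Nothing is evaluated until the iterator is consumed, which is the same lazy idea Spark applies to transformations such as `mapPartitions`. The `is_long` helper and the word list are illustrative.

```python
words = ["spark", "rdd", "dataframe", "map"]
checked = []  # records which words the predicate has seen


def is_long(word):
    """Standard def-style predicate that logs each call."""
    checked.append(word)
    return len(word) > 3


lazy = filter(is_long, words)  # returns a lazy iterator
print(checked)                 # []  (nothing has been evaluated yet)

long_words = list(lazy)        # consuming the iterator runs the predicate
print(long_words)              # ['spark', 'dataframe']
print(checked)                 # ['spark', 'rdd', 'dataframe', 'map']

# The same predicate written as a lambda gives the same result.
same = list(filter(lambda w: len(w) > 3, words))
print(same == long_words)      # True
```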
`take()` is important for debugging because inspecting your entire dataset on a single machine may not be possible. This is recognized as the MapReduce framework because the division of labor can usually be characterized by sets of the map, shuffle, and reduce operations found in functional programming. Despite its popularity as just a scripting language, Python exposes several programming paradigms, such as array-oriented programming, object-oriented programming, asynchronous programming, and many others. The answer won't appear immediately after you click the cell. Notice that the end of the `docker run` command output mentions a local URL. Note: You didn't have to create a `SparkContext` variable in the PySpark shell example. Databricks allows you to host your data with Microsoft Azure or AWS and has a free 14-day trial. First, you'll need to install Docker.

The sequential approach has two problems:

- There may not be enough memory to load the list of all items or bills.
- It may take too long to get the results, because the execution is sequential (thanks to the `for` loop).

You can create RDDs in a number of ways, but one common way is the PySpark `parallelize()` function. Out of this dataset I created another dataset containing only the numeric attributes, in which I have `numeric_attributes` in an array.
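To illustrate the map, shuffle, and reduce phases, here is a word count in plain Python. It mirrors the structure of a MapReduce job on one machine; it is not how Spark implements these phases internally, and the input lines are made up.

```python
from collections import defaultdict
from functools import reduce

lines = ["spark makes loops parallel", "loops in spark"]

# Map: emit a (key, value) pair for every word.
mapped = [(word, 1) for line in lines for word in line.split()]

# Shuffle: group all values that share the same key.
grouped = defaultdict(list)
for word, count in mapped:
    grouped[word].append(count)

# Reduce: combine each key's values into a single result.
counts = {word: reduce(lambda a, b: a + b, values) for word, values in grouped.items()}

print(counts)
```

In Spark, the shuffle step is what moves all values for one key onto the same partition so the reduce can run in parallel per key.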
The program exits the loop only after the `else` block is executed. How are you going to put your newfound skills to use?
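A small example of the `for`/`else` form: the `else` block runs only when the loop finishes without hitting `break`. The `find_first_negative` helper and its inputs are illustrative.

```python
def find_first_negative(values):
    """Return a message for the first negative value, or a fallback."""
    for v in values:
        if v < 0:
            message = f"found {v}"
            break  # leaving via break skips the else block
    else:
        # Runs only if the loop completed without break.
        message = "no negatives"
    return message


print(find_first_negative([3, -1, 4]))  # found -1
print(find_first_negative([3, 1, 4]))   # no negatives
```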

To connect to the CLI of the Docker setup, you'll need to start the container like before and then attach to that container. Luckily, technologies such as Apache Spark, Hadoop, and others have been developed to solve this exact problem. I want to do parallel processing in a `for` loop using PySpark. `lambda` functions in Python are defined inline and are limited to a single expression. Below is the PySpark equivalent. Don't worry about all the details yet.

```python
import pyspark
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()
```

Sample output row: `Row(trees=20, r_squared=0.8633562691646341)`.

There are two ways to loop through a DataFrame: using `map()` and using `foreach()`. Another use case is trying out different elastic net parameters with cross-validation to select the best-performing model. Let us see the following steps in detail.

I am using a `for` loop in my script to call a function for each element of `size_DF` (a DataFrame), but it is taking a lot of time. I want to pass the length of each element of `size_DF` to the function, like this:

```python
for row in size_DF:
    length = row[0]
    print("length: ", length)
    insertDF = newObject.full_item(sc, dataBase, length, end_date)
```

How can I replace this `for` loop with parallel processing in PySpark?
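One way to parallelize the `size_DF` loop, sketched under assumptions: since each `full_item` call runs its own selects, joins, and inserts as separate Spark jobs, the calls can be submitted concurrently from driver threads with `concurrent.futures.ThreadPoolExecutor`. Here `full_item` is a hypothetical stub with a simplified signature (the real method also takes `sc` and `dataBase`), `size_rows` stands in for the rows of `size_DF`, and `end_date` is a made-up value. All of these threads execute on the driver node, so this only helps when the work inside each call is Spark jobs running on the cluster, not heavy Python computation.

```python
from concurrent.futures import ThreadPoolExecutor


def full_item(length, end_date):
    """Hypothetical stub for newObject.full_item(sc, dataBase, length, end_date).

    A real version would run Spark selects/joins and an insert; this one
    returns a description so the control flow can be checked locally.
    """
    return f"inserted length={length} up to {end_date}"


# Stand-in for the rows of size_DF: each row's first field is the length.
size_rows = [(10,), (20,), (30,)]
end_date = "2023-01-31"  # hypothetical value

with ThreadPoolExecutor(max_workers=4) as executor:
    # executor.map returns results in the same order as the inputs
    results = list(executor.map(lambda row: full_item(row[0], end_date), size_rows))

for r in results:
    print(r)
```

`max_workers` bounds how many Spark jobs the driver submits at once; with around 300 elements, a small pool keeps the cluster from being flooded with concurrent jobs.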
