num_of_files_after_restore: The number of files in the table after restoring.

The operations are returned in reverse chronological order.

In the UI, specify the folder name in which you want to save your files.

You must choose an interval

To check whether a table exists in the Databricks Hive metastore using PySpark, use the code below: if spark.catalog._jcatalog.tableExists(f"{database_name}.{table_n

Time travel queries on a cloned table will not work with the same inputs as they work on its source table.

io.delta:delta-core_2.12:2.3.0,io.delta:delta-iceberg_2.12:2.3.0

-- Create a shallow clone of /data/source at /data/target
-- Replace the target. Once happy:
-- This should leverage the update information in the clone to prune to only
-- changed files in the clone if possible

Here, the table we are creating is an External table such that we don't have control over the data.

print("Table exists")

Number of files removed from the sink (target).

For shallow clones, stream metadata is not cloned.

If your data is partitioned, you must specify the schema of the partition columns as a DDL-formatted string (that is,

It contains over 7 million records.

Parameters of the operation (for example, predicates).

To check whether a table exists in the Hive metastore using PySpark, see https://spark.apache.org/docs/latest/api/python/reference/pyspark.sql/api/pyspark.sql.Catalog.tableExists.html
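As a minimal sketch of the table-existence check described above, the helper below wraps Catalog.tableExists (available from PySpark 3.3.0) and falls back to scanning Catalog.listTables on older versions. The function name table_exists and its parameters are hypothetical, and spark is assumed to be an already created SparkSession.

```python
def table_exists(spark, database_name, table_name):
    """Return True if database_name.table_name is registered in the metastore.

    `spark` is assumed to be an existing SparkSession. The helper name and
    its parameters are illustrative, not part of any library.
    """
    catalog = spark.catalog
    # Catalog.tableExists(tableName, dbName) exists from PySpark 3.3.0 onward.
    if hasattr(catalog, "tableExists"):
        return bool(catalog.tableExists(table_name, database_name))
    # Fallback for older versions: scan the tables listed for the database.
    wanted = table_name.lower()
    return any(t.name.lower() == wanted for t in catalog.listTables(database_name))
```

A call such as table_exists(spark, "delta_training", "my_table") could then guard a create or write step; the table name here is a placeholder.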

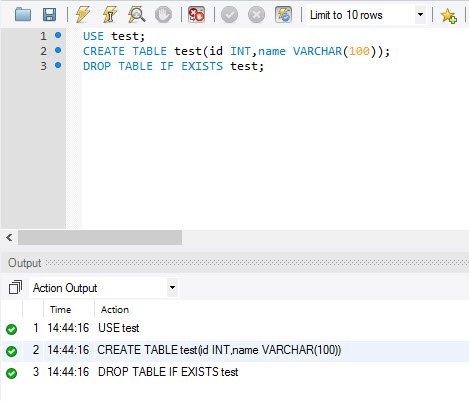

CLONE reports the following metrics as a single-row DataFrame once the operation is complete:

If you have created a shallow clone, any user who reads the shallow clone needs permission to read the files in the original table, since the data files remain in the source table's directory from which we cloned.

In PySpark 2.4.0 you can use one of two approaches to check if a table exists.

Add the @dlt.table decorator

Then it talks about Delta Lake and how it solved these issues with a practical, easy-to-apply tutorial.

Problem: I have a PySpark DataFrame and I would like to check if a column exists in the DataFrame schema. Could you please explain how to do it?

No schema enforcement leads to data with inconsistent and low-quality structure.

Apache Spark achieves high performance for both batch and streaming data, using a state-of-the-art DAG scheduler, a query optimizer, and a physical execution engine.

Then, we create a Delta table, optimize it, and run a second query using the Databricks Delta version of the same table to see the performance difference.

Details of the job that ran the operation.

spark.sql("create database if not exists delta_training")

You can retrieve information on the operations, user, timestamp, and so on for each write to a Delta table

If you have performed Delta Lake operations that can change the data files (for example.

Spark offers over 80 high-level operators that make it easy to build parallel apps, and you can use it interactively from the Scala, Python, R, and SQL shells.
// Below we are listing the data in the destination path

For example, if the source table was at version 100 and we are creating a new table by cloning it, the new table will have version 0, and therefore we could not run time travel queries on the new table such as

5. You see two rows: the row with version 0 (lower row) shows the initial version when the table is created.

Before we test the Delta table, we may optimize it using ZORDER by the column DayofWeek.

properties are set.

Size of the 75th percentile file after the table was optimized.

Two problems face data engineers, machine learning engineers, and data scientists when dealing with data: reliability and performance.

AddFile(/path/to/file-1, dataChange = true), (name = Viktor, age = 29, (name = George, age = 55), AddFile(/path/to/file-2, dataChange = true), AddFile(/path/to/file-3, dataChange = false), RemoveFile(/path/to/file-1), RemoveFile(/path/to/file-2), (No records as Optimize compaction does not change the data in the table), RemoveFile(/path/to/file-3), AddFile(/path/to/file-1, dataChange = true), AddFile(/path/to/file-2, dataChange = true), (name = Viktor, age = 29), (name = George, age = 55), (name = George, age = 39).

When a DataFrame writes data to Hive, the default is the Hive default database.

For tables less than 1 TB in size, Databricks recommends letting Delta Live Tables control data organization.

You can use the JVM object for this: if spark._jsparkSession.catalog().tableExists('db_name', 'tableName'):

Executing a cell that contains Delta Live Tables syntax in a Databricks notebook results in an error message.

USING DELTA

What makes building data lakes a pain is, you guessed it, data.

.filter(col("tableName") == "

Delta Lake is fully compatible with Apache Spark APIs.

All Python logic runs as Delta Live Tables resolves the pipeline graph.

To test a workflow on a production table without corrupting the table, you can easily create a shallow clone.

You can override the table name using the name parameter.

Apache Spark is a large-scale data processing and unified analytics engine for big data and machine learning.

pyspark.sql.Catalog.tableExists.

The logic is similar to Pandas' any(~) method - you can think of vals == "A" returning a boolean mask, and the method any(~) returning True if there exists at least one True in the mask.
.appName("Spark Delta Table")

The "Sampledata" value is created, in which data is input using the spark.range() function.

RESTORE reports the following metrics as a single-row DataFrame once the operation is complete:

table_size_after_restore: The size of the table after restoring.

This recipe helps you create Delta tables in Databricks in PySpark.

The following table lists the map key definitions by operation.

The path is like /FileStore/tables/your folder name/your file

Number of files in the latest version of the table.
The Delta Lake transaction log guarantees exactly-once processing, even when there are other streams or batch

I will use Python for this tutorial, but you may get along since the APIs are about the same in any language.

For example, the following Python example creates three tables named clickstream_raw, clickstream_prepared, and top_spark_referrers.

It was originally developed at UC Berkeley in 2009.

Keep in mind that the Spark session (spark) is already created.

You can restore an already restored table.

October 21, 2022.

To change this behavior, see Data retention.

Step 3: the creation of the Delta table.

This recipe explains what Delta Lake is and how to create Delta tables in Spark.

PySpark provides the StructType class (from pyspark.sql.types import StructType) to define the structure of the DataFrame.

Number of rows just copied over in the process of updating files.

Columns added in the future will always be added after the last column.

Read the raw JSON clickstream data into a table.

minimum and maximum values for each column).

I can see the files are created in the default spark-warehouse folder.

In this Spark project, you will use the real-world production logs from the NASA Kennedy Space Center WWW server in Florida to perform scalable log analytics with Apache Spark, Python, and Kafka.

So I commented out the code for the first two steps and re-ran the program. I get

Delta Lake is an open-source storage layer that brings reliability to data lakes.

Lack of consistency when mixing appends and reads, or when both batching and streaming data to the same location.

An additional jar, delta-iceberg, is needed to use the converter.

By default, table history is retained for 30 days.

You can retrieve detailed information about a Delta table (for example, number of files, data size) using DESCRIBE DETAIL.
StructType is a collection or list of StructField objects.

Converting Iceberg metastore tables is not supported.

You can specify the log retention period independently for the archive table.

In the case the table already exists, behavior of this function depends on the

Delta Lake brings both reliability and performance to data lakes.

Delta can write batch and streaming data into the same table, allowing a simpler architecture and quicker data ingestion to the query result.

period that any stream can lag behind the most recent update to the table.

Number of Parquet files that have been converted.

val spark: SparkSession = SparkSession.builder()

Similar to a conversion from a Parquet table, the conversion is in-place and there won't be any data copy or data rewrite.

insertInto does not specify the parameters of the database.

command.

table_exist = False

And we viewed the contents of the file through the table we had created.

These two steps reduce the amount of metadata and number of uncommitted

Metrics of the operation (for example, number of rows and files modified).

In this article, you have learned how to check if a column exists in DataFrame columns and struct columns, and how to check case-insensitively.

A data lake is a central location that holds a large amount of data in its native, raw format, as well as a way to organize large volumes of highly diverse data.

Spark Internal Table.

Configure Delta Lake to control data file size.

Converting Iceberg merge-on-read tables that have experienced updates, deletions, or merges is not supported.

We read the source file and write to a specific location in delta format.
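The column-existence check mentioned above, including the case-insensitive variant, can be sketched in plain Python over a list of column names such as the one returned by df.columns. The helper name has_column and its case_sensitive flag are assumptions made for illustration; only top-level column names are handled.

```python
def has_column(columns, name, case_sensitive=True):
    """Check whether `name` appears in `columns` (for example, df.columns).

    Hypothetical helper: only top-level column names are compared, so fields
    nested inside struct columns are not searched.
    """
    if case_sensitive:
        return name in columns
    # Compare lowercase forms so "FirstName" matches "firstname".
    return name.lower() in {c.lower() for c in columns}
```

With a DataFrame df, has_column(df.columns, "FirstName", case_sensitive=False) would be one way to call it.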
When doing machine learning, you may want to archive a certain version of a table on which you trained an ML model.

To check if values exist using an OR operator: we are checking whether the value B or C exists in the vals column.

Another suggestion that avoids creating a list-like structure: if (spark.sql("show tables in

Partitioning, while useful, can be a performance bottleneck when a query selects too many fields.

// Creating table by path

Spark checks whether a partition exists in the Hive table:

# Query whether the daily partition exists
# Query whether the monthly partition exists
"select count(*) from ${databaseName}.${TableName} "

https://stackoverflow.com/questions/11700127/how-to-select-data-from-hive-with-specific-partition
https://stackoverflow.com/questions/46477270/spark-scala-how-can-i-check-if-a-table-exists-in-hive
https://stackoverflow.com/questions/43086158/how-to-check-whether-any-particular-partition-exist-or-not-in-hive

spark.sql(ddl_query).

You can easily use it on top of your data lake with minimal changes, and yes, it's open source!

This article introduces Databricks Delta Lake.

ID of the cluster on which the operation ran.

The Spark SQL SaveMode and SparkSession packages are imported into the environment to create the Delta table.

}, DeltaTable object is created in which the Spark session is initiated.

The processed data can be analysed to monitor the health of production systems on AWS.

Check if a field exists in a StructType

Size in bytes of files added by the restore.

Add Column When not Exists on DataFrame.

Some of the columns may be null because the corresponding information may not be available in your environment.

Delta Lake reserves Delta table properties starting with delta. (Built on standard Parquet.)

If there is a downstream application, such as a Structured Streaming job that processes the updates to a Delta Lake table, the data change log entries added by the restore operation are considered new data updates, and processing them may result in duplicate data.
Copy the Python code and paste it into a new Python notebook.

Delta Lake automatically validates that the schema of the DataFrame being written is compatible with the schema of the table.

See the Delta Lake APIs for Scala, Java, and Python syntax details.

If a table path has an empty _delta_log directory, is it a Delta table?

Time taken to scan the files for matches.

Not provided when partitions of the table are deleted.

Here, we are checking whether both the values A and B exist in the PySpark column.

File size inconsistency with either too small or too big files.

See Create a Delta Live Tables materialized view or streaming table.

When using VACUUM, to configure Spark to delete files in parallel (based on the number of shuffle partitions), set the session configuration "spark.databricks.delta.vacuum.parallelDelete.enabled" to "true".

println(df.schema.fieldNames.contains("firstname"))
println(df.schema.contains(StructField("firstname",StringType,true)))

External Table.

Because Delta Live Tables manages updates for all datasets in a pipeline, you can schedule pipeline updates to match latency requirements for materialized views and know that queries against these tables contain the most recent version of data available.

because old snapshots and uncommitted files can still be in use by concurrent

The following code also includes examples of monitoring and enforcing data quality with expectations.

Now, let's try Delta.

Python syntax for Delta Live Tables extends standard PySpark with a set of decorator functions imported through the dlt module.

Sampledata.write.format("delta").save("/tmp/delta-table")

The RESTORE command accepts as options either a version corresponding to the earlier state or a timestamp of when the earlier state was created.

You can generate a manifest file for a Delta table that can be used by other processing engines (that is, other than Apache Spark) to read the Delta table.
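To make the two RESTORE options described above concrete (a version or a timestamp), here is a small sketch that only builds the SQL text. The helper name restore_statement and its parameters are hypothetical, and it does no quoting or escaping, so it assumes trusted inputs.

```python
def restore_statement(table, version=None, timestamp=None):
    """Build a Delta Lake RESTORE statement for a version or a timestamp.

    Hypothetical helper for illustration only: `table` and `timestamp` are
    inserted as-is, so they must come from trusted input.
    """
    # Exactly one of the two options must be supplied.
    if (version is None) == (timestamp is None):
        raise ValueError("pass exactly one of version or timestamp")
    if version is not None:
        return f"RESTORE TABLE {table} TO VERSION AS OF {int(version)}"
    return f"RESTORE TABLE {table} TO TIMESTAMP AS OF '{timestamp}'"
```

The resulting string could then be passed to spark.sql(...) in a session where Delta Lake is configured.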
Metadata not cloned are the table description and user-defined commit metadata.

For more information, see Parquet Files.

Apache Spark is 100% open source, hosted at the vendor-independent Apache Software Foundation.

The data is written to the Hive table or Hive table partition:

Failed jobs leave data in a corrupt state.

The following example shows this import, alongside import statements for pyspark.sql.functions.

Future models can be tested using this archived data set.

Retrieve Delta table history.

